Abstract

State-of-the-art models on contemporary 3D perception benchmarks like ScanNet consume and label dataset-provided 3D point clouds, obtained through post-processing of sensed multiview RGB-D images. They are typically trained in-domain, forgo large-scale 2D pre-training, and outperform alternatives that featurize the posed RGB-D multiview images instead. The gap in performance between methods that consume posed images versus post-processed 3D point clouds has fueled the belief that 2D and 3D perception require distinct model architectures.

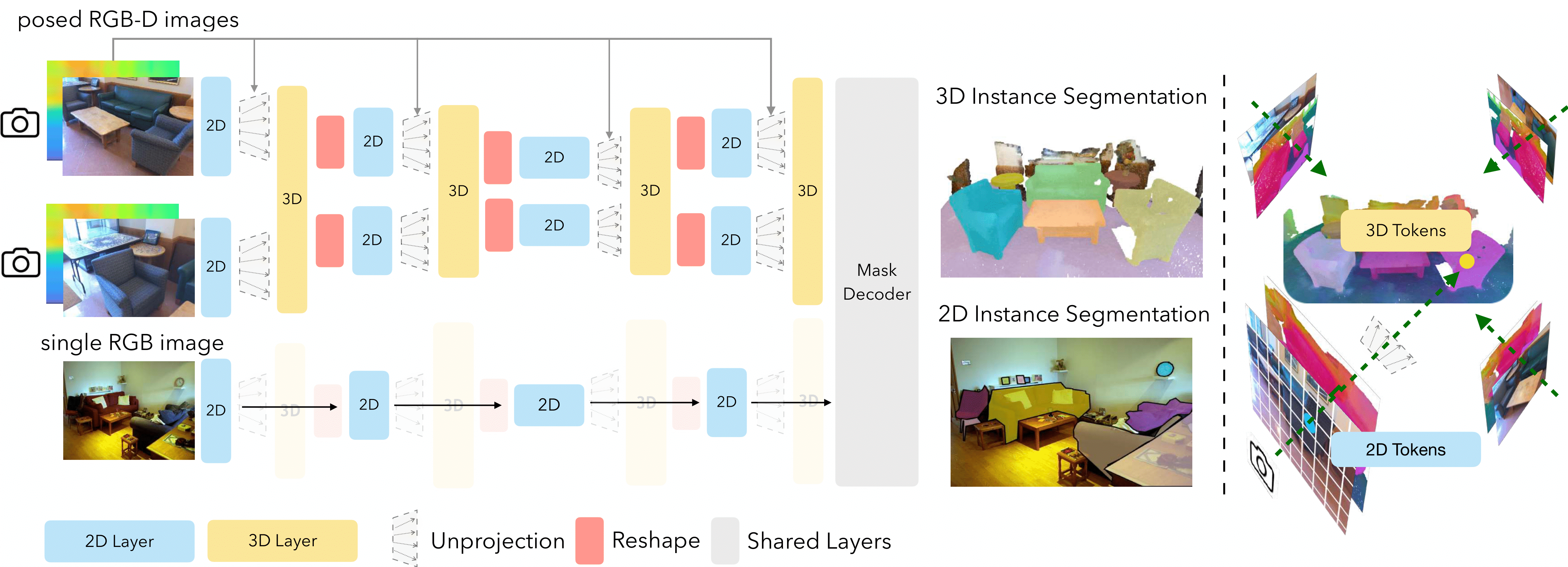

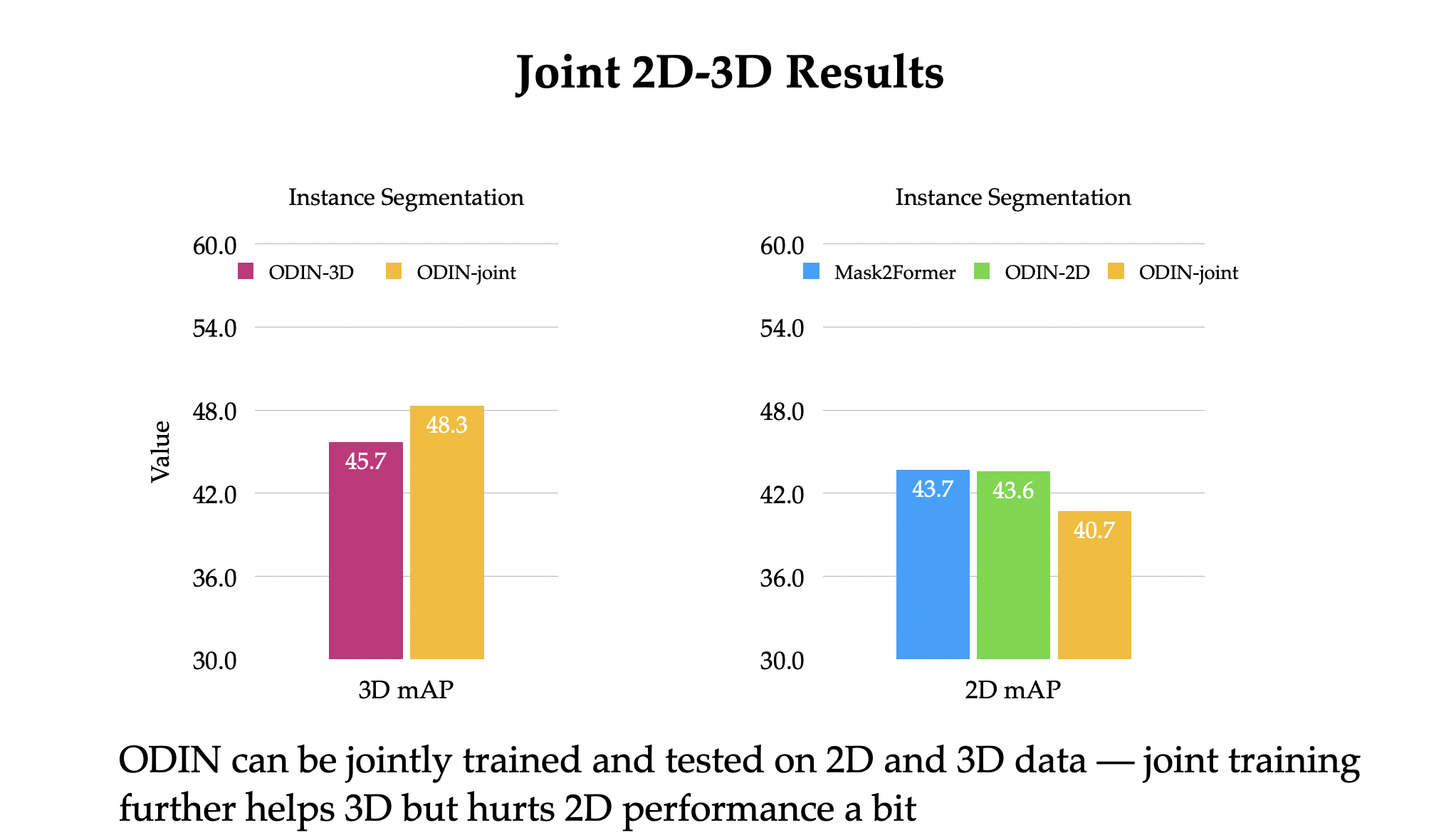

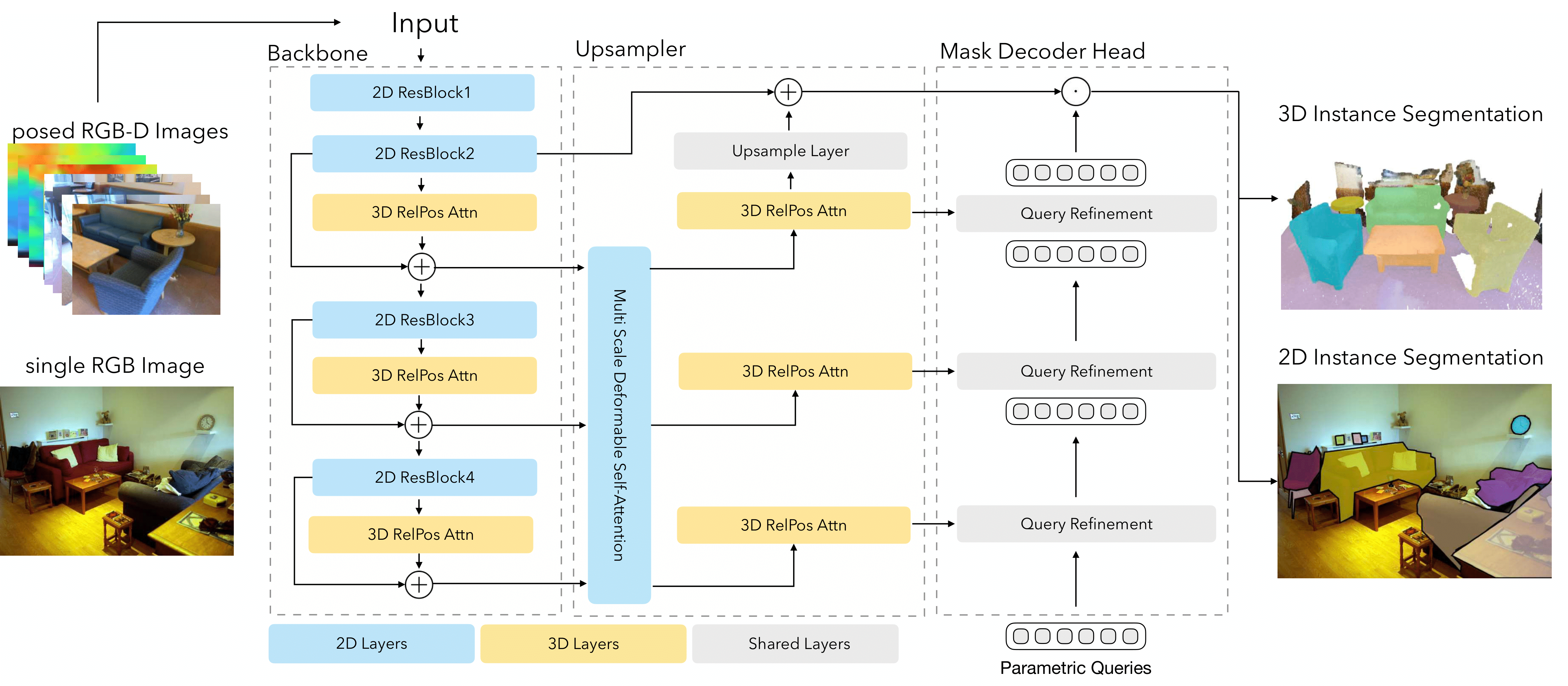

In this paper, we challenge this view and propose ODIN (Omni-Dimensional INstance segmentation), a model that

can segment and label both 2D RGB images and 3D point clouds, using a transformer architecture that alternates between 2D within-view and 3D cross-view information fusion. Our model differentiates 2D and 3D feature operations through the positional encodings of the tokens involved, which capture pixel coordinates for 2D patch tokens and 3D coordinates for 3D feature tokens. ODIN achieves

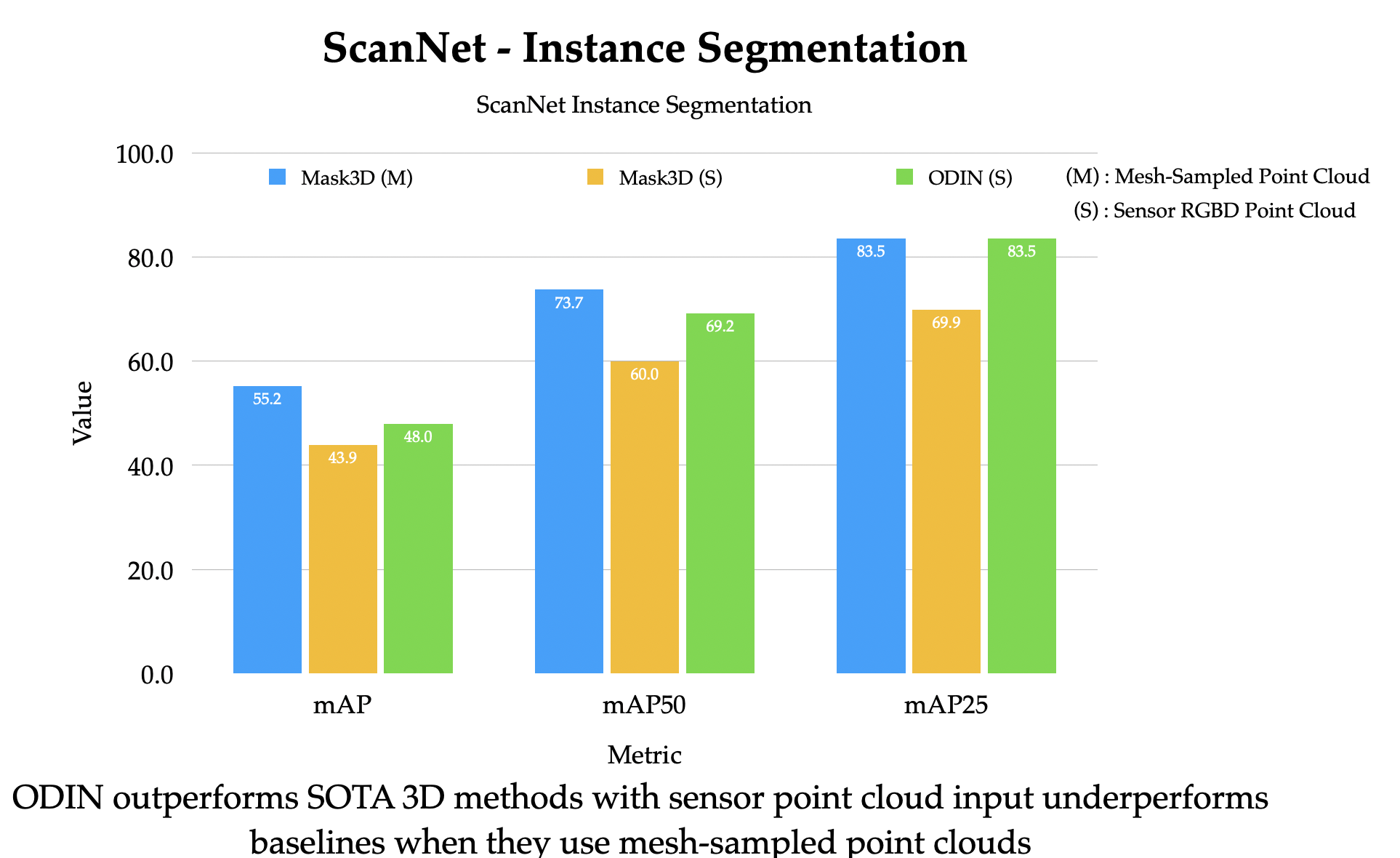

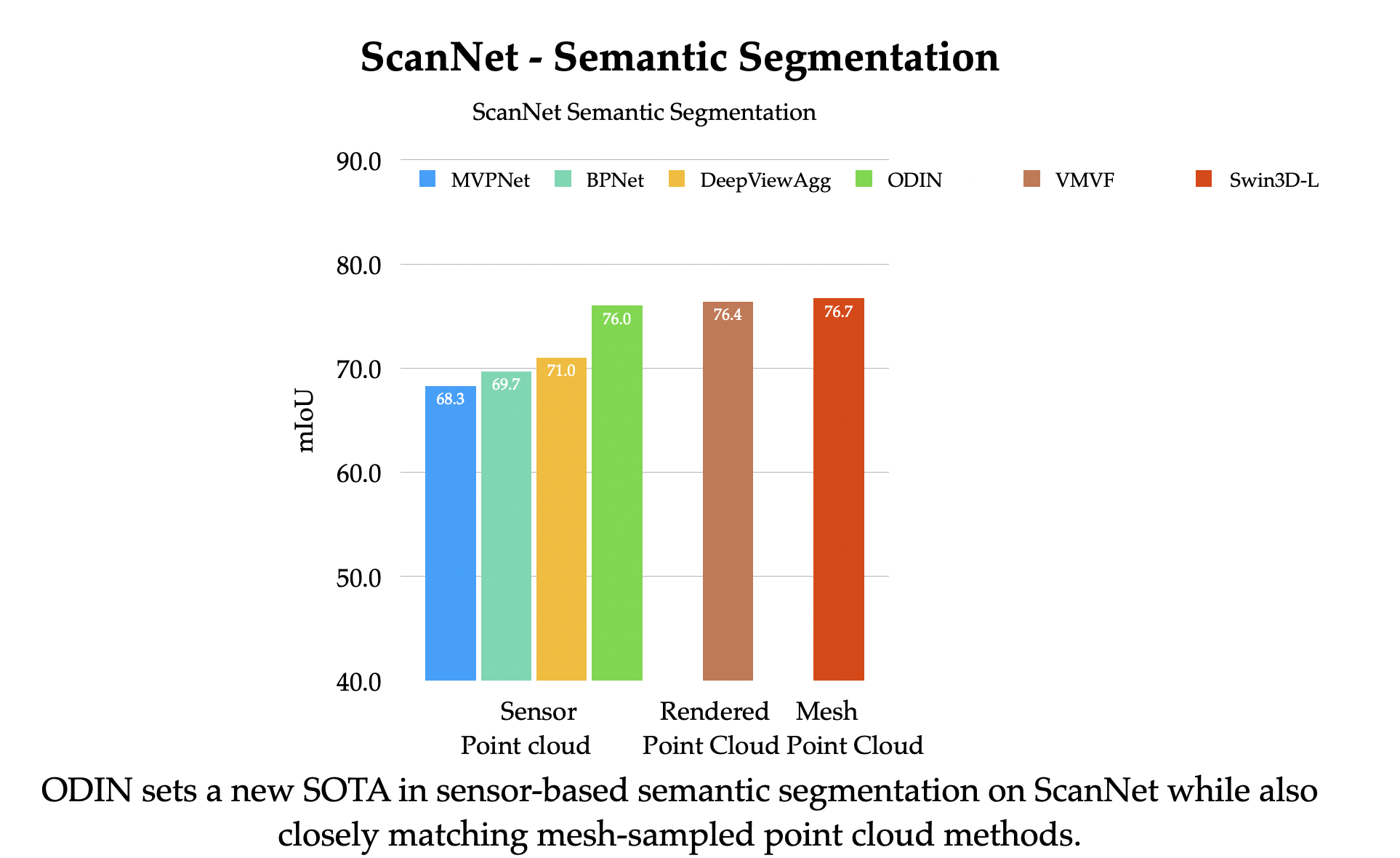

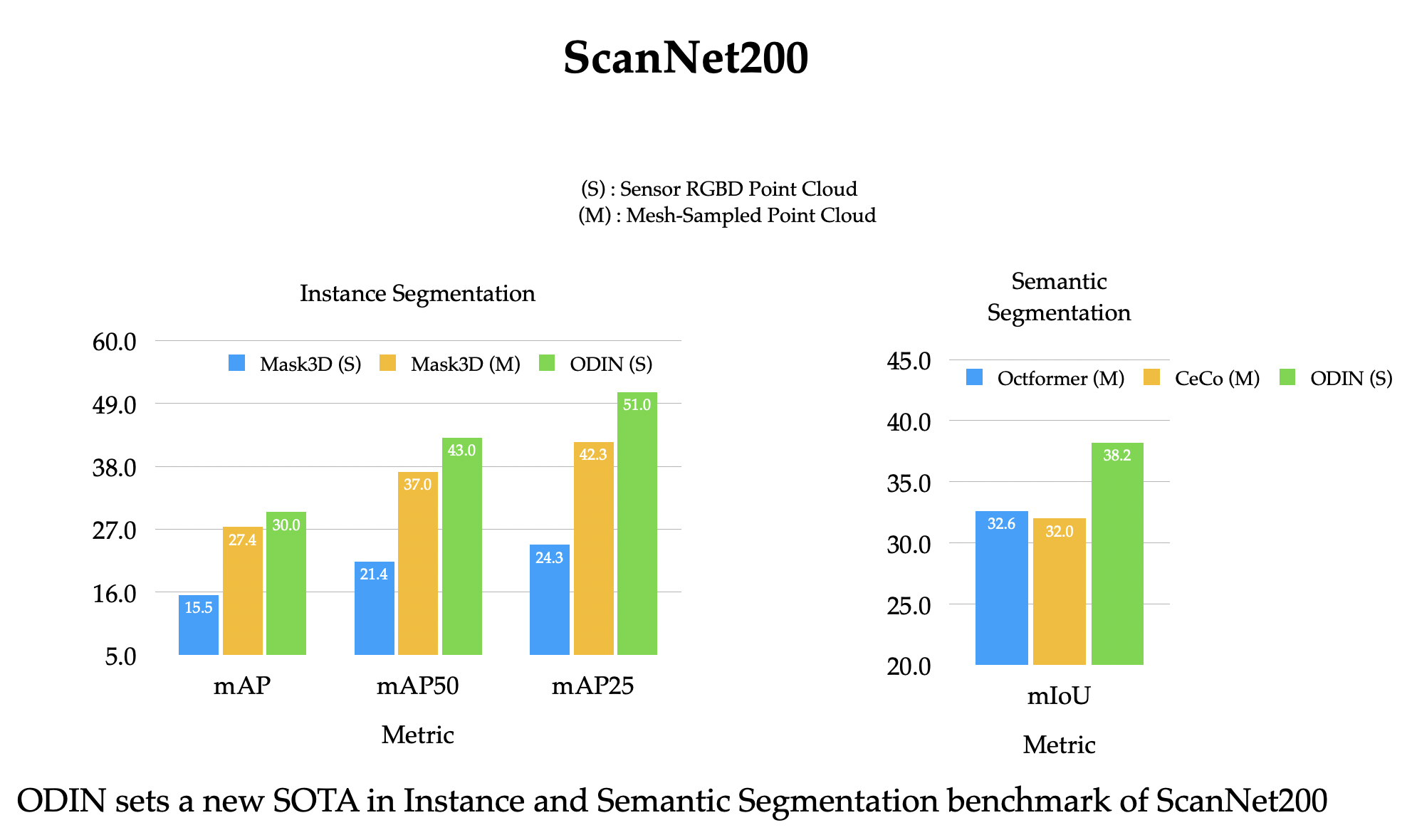

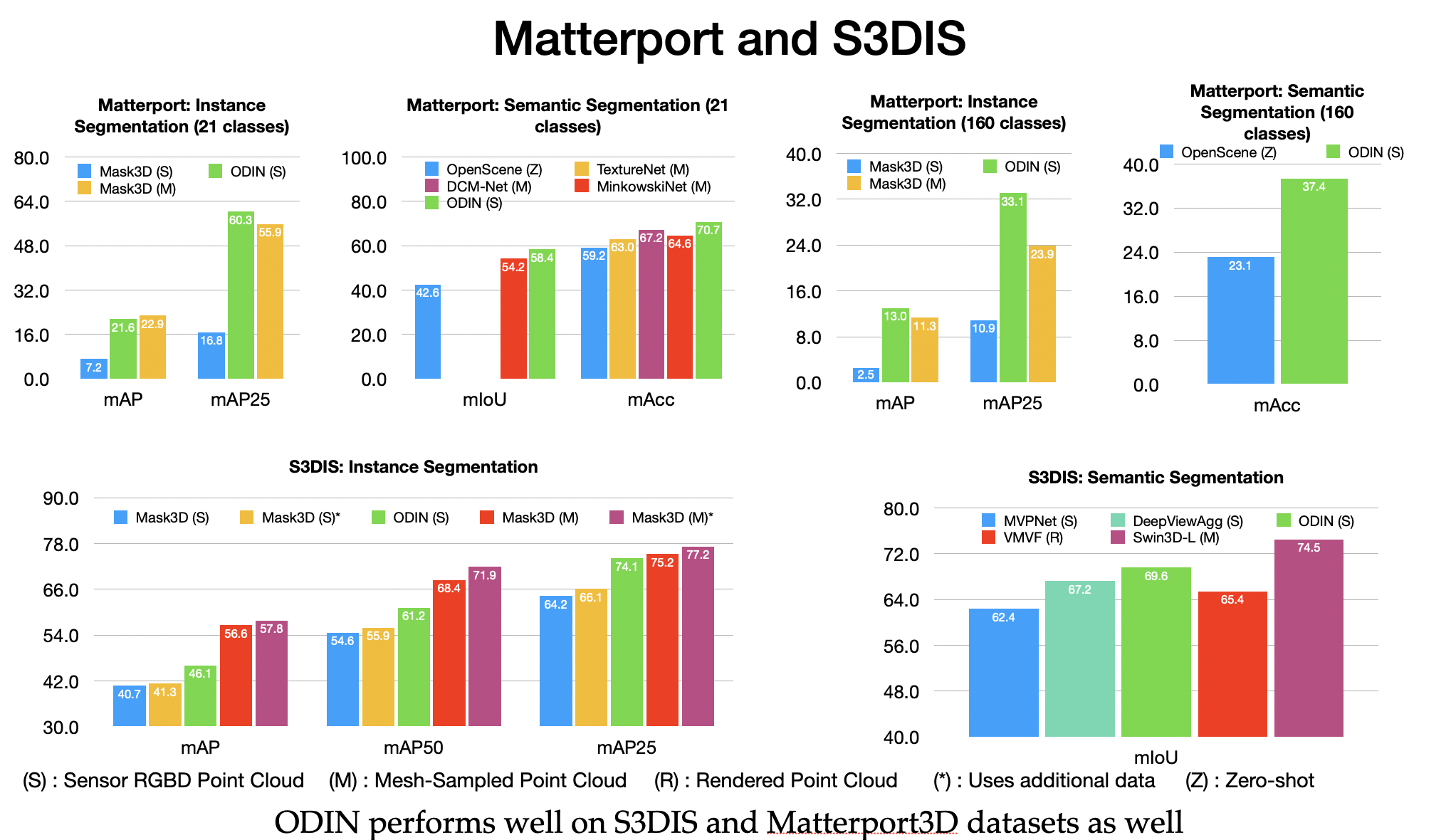

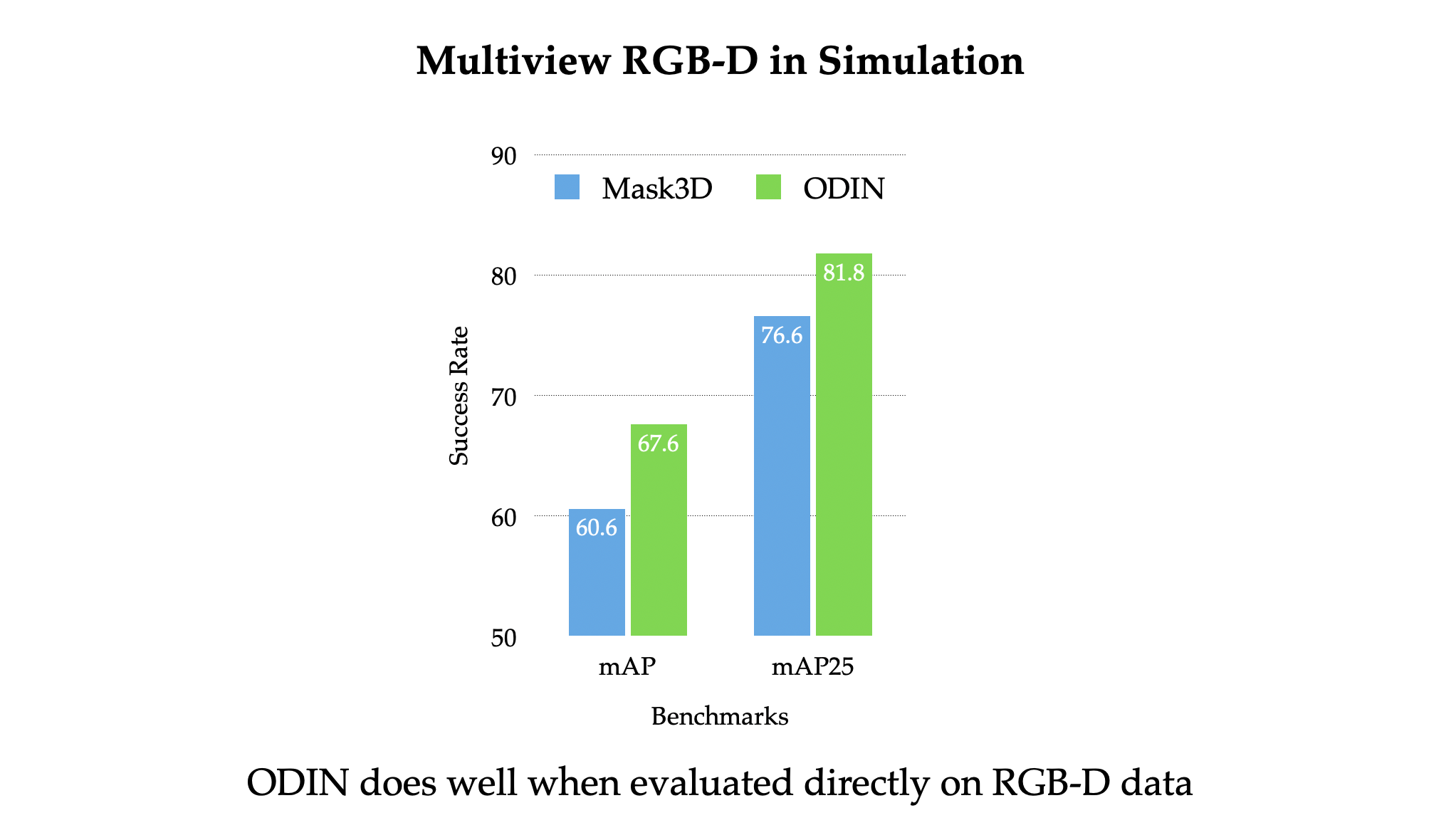

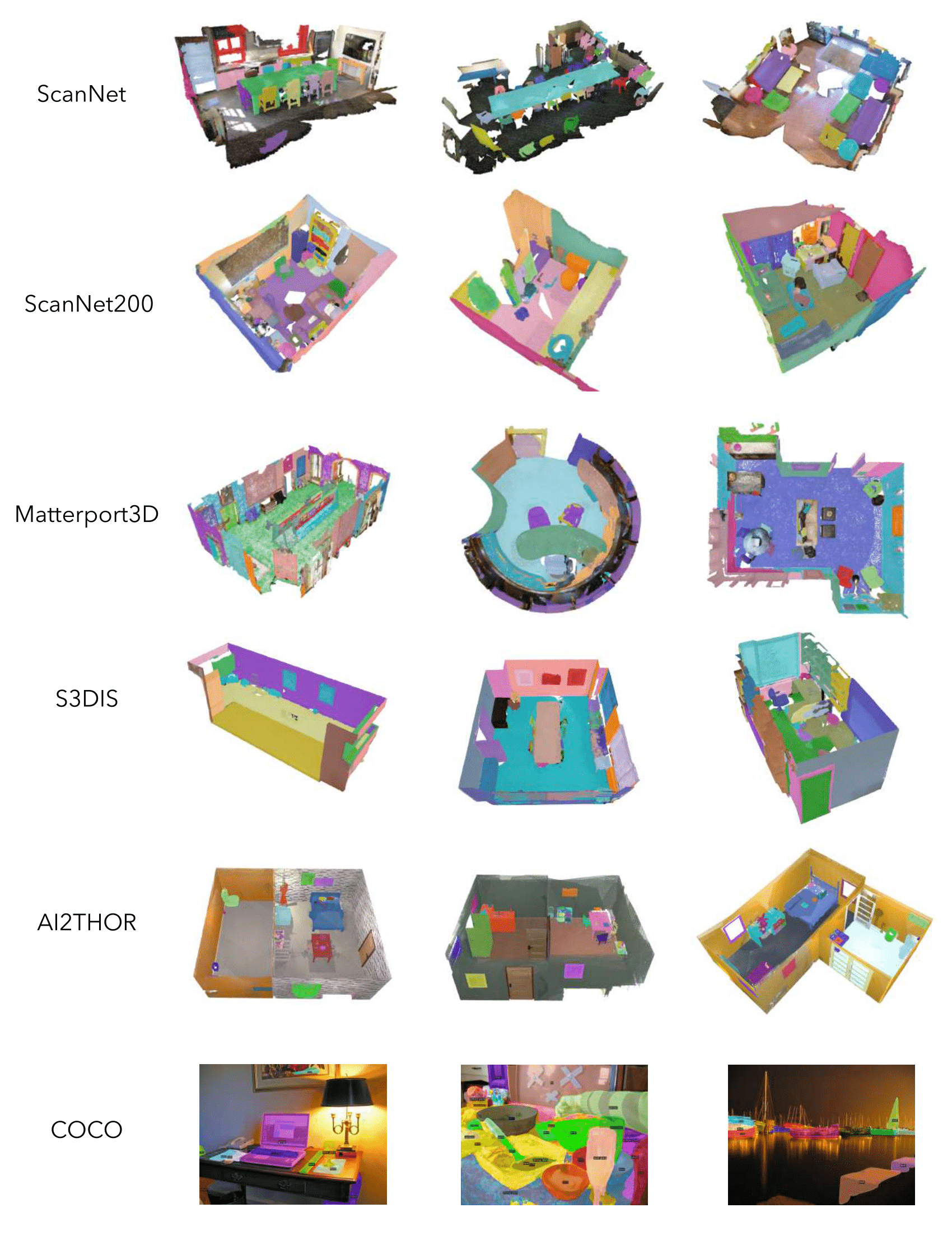

state-of-the-art performance on ScanNet200, Matterport3D and AI2THOR 3D instance segmentation benchmarks, and

competitive performance on ScanNet, S3DIS and COCO. It

outperforms all previous works by a wide margin when the

sensed 3D point cloud is used in place of the point cloud

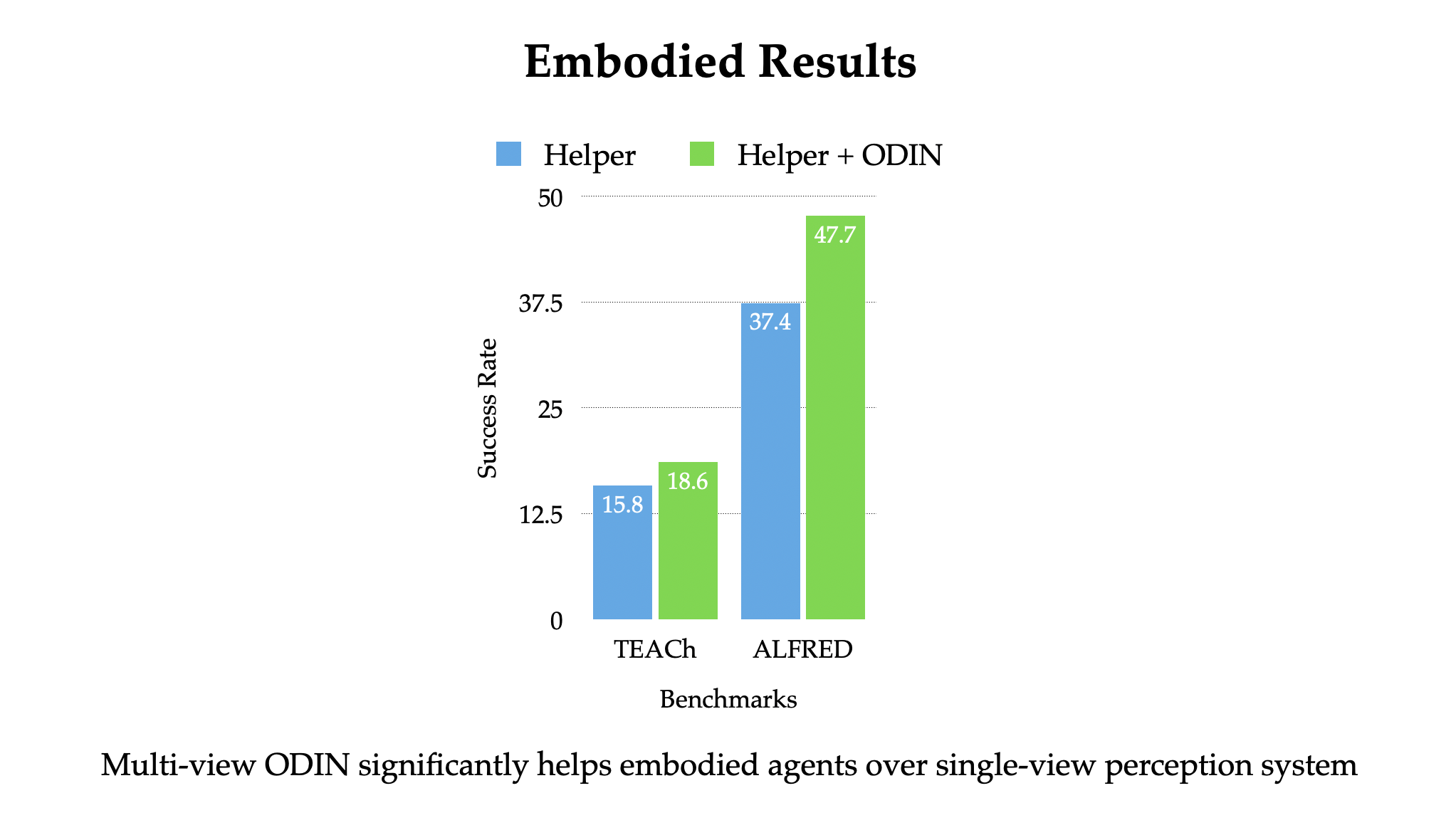

sampled from 3D mesh. When used as the 3D perception engine in an instructable embodied agent architecture, it sets

a new state-of-the-art on the TEACh action-from-dialogue

benchmark.

Method: ODIN
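
The abstract describes alternating between 2D within-view and 3D cross-view fusion, with the two distinguished only by the positional encodings of the tokens (pixel coordinates versus 3D coordinates). The sketch below illustrates that idea in NumPy and is not the authors' implementation. Several things in it are assumptions made for illustration: the function names `unproject`, `attend` and `alternate_fusion`, the projection matrices `W2d` and `W3d`, one token per pixel instead of patch tokens, dense single-head softmax attention, and a linear positional encoding. The 3D coordinates are obtained by lifting each depth map with a standard pinhole camera model and a camera-to-world pose.

```python
import numpy as np

def unproject(depth, K, cam_to_world):
    """Lift a depth map (H, W) to world-space 3D points (H*W, 3).

    K is a 3x3 pinhole intrinsics matrix; cam_to_world is a 4x4 pose.
    """
    H, W = depth.shape
    v, u = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy
    x = (u - K[0, 2]) * depth / K[0, 0]
    y = (v - K[1, 2]) * depth / K[1, 1]
    pts_cam = np.stack([x, y, depth, np.ones_like(depth)], axis=-1).reshape(-1, 4)
    return (pts_cam @ cam_to_world.T)[:, :3]

def attend(feats, pos, W_pos):
    """Single-head self-attention over one token set (N, C).

    The positional encoding (assumed linear here) is added to queries and
    keys only, so the same routine serves 2D and 3D fusion.
    """
    qk = feats + pos @ W_pos
    scores = qk @ qk.T / np.sqrt(feats.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return feats + weights @ feats  # residual update

def alternate_fusion(feats, pix_xy, xyz, W2d, W3d, num_layers=2):
    """Alternate within-view (2D) and cross-view (3D) attention.

    feats: (V, N, C) token features for V views with N tokens each
    pix_xy: (V, N, 2) pixel coordinates; xyz: (V, N, 3) world coordinates
    """
    V, N, C = feats.shape
    for _ in range(num_layers):
        # 2D step: tokens attend only within their own view, keyed by pixel position.
        feats = np.stack([attend(feats[i], pix_xy[i], W2d) for i in range(V)])
        # 3D step: tokens from all views attend jointly, keyed by 3D position.
        fused = attend(feats.reshape(V * N, C), xyz.reshape(V * N, 3), W3d)
        feats = fused.reshape(V, N, C)
    return feats
```

With a single RGB image and no depth, the 3D step could simply be skipped, which matches the abstract's claim that one model handles both 2D and 3D inputs; how ODIN actually handles that case is not specified in this page.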

Quantitative Results

ODIN achieves state-of-the-art performance on ScanNet200, Matterport3D and AI2THOR 3D instance segmentation benchmarks, and competitive performance on ScanNet, S3DIS and COCO. When used as the 3D perception engine in an instructable embodied agent architecture, it sets a new state-of-the-art on the TEACh action-from-dialogue benchmark.

Qualitative Results: 2D and 3D Segmentation

ODIN Perception with an Embodied Agent

BibTeX

@misc{jain2024odin,
  title={ODIN: A Single Model for 2D and 3D Perception},
  author={Ayush Jain and Pushkal Katara and Nikolaos Gkanatsios and Adam W. Harley and Gabriel Sarch and Kriti Aggarwal and Vishrav Chaudhary and Katerina Fragkiadaki},
  year={2024},
  eprint={2401.02416},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}